NWM Point Data Loading#

Each river reach in the National Water Model is represented as a point, therefore output variables such as streamflow and velocity correspond to a single river reach via the feature_id.

This notebook demonstrates the use of TEEHR to fetch operational NWM streamflow forecasts from Google Cloud Storage (GCS) and load them into the TEEHR data model as parquet files.

TEEHR makes use of Dask when it can to parallelize data fetching and improve performance.

The steps are fairly straightforward:

Import the TEEHR point loading module and other necessary libraries.

Specify input variables such a NWM version, forecast configuration, start date, etc.

Call the function to fetch and format the data.

# Import the required packages.

import os

import teehr.loading.nwm.nwm_points as tlp

from pathlib import Path

from dask.distributed import Client

Specify input variables#

Now we can define the variables that determining what NWM data to fetch, the NWM version, the source, how it should be processed, and the time span.

CONFIGURATION = "short_range" # analysis_assim, short_range, analysis_assim_hawaii, medium_range_mem1

OUTPUT_TYPE = "channel_rt"

VARIABLE_NAME = "streamflow"

T_MINUS = [0, 1, 2] # Only used if an assimilation run is selected

NWM_VERSION = "nwm22" # Currently accepts "nwm22" or "nwm30"

# Use "nwm22" for dates prior to 09-19-2023

DATA_SOURCE = "GCS" # Specifies the remote location from which to fetch the data

# ("GCS", "NOMADS", "DSTOR")

KERCHUNK_METHOD = "auto" # When data_source = "GCS", specifies the preference in creating Kerchunk reference json files.

# "local" - always create new json files from netcdf files in GCS and save locally, if they do not already exist

# "remote" - read the CIROH pre-generated jsons from s3, ignoring any that are unavailable

# "auto" - read the CIROH pre-generated jsons from s3, and create any that are unavailable, storing locally

PROCESS_BY_Z_HOUR = True # If True, NWM files will be processed by z-hour per day. If False, files will be

# processed in chunks (defined by STEPSIZE). This can help if you want to read many reaches

# at once (all ~2.7 million for medium range for example).

STEPSIZE = 100 # Only used if PROCESS_BY_Z_HOUR = False. Controls how many files are processed in memory at once

# Higher values can increase performance at the expense on memory (default value: 100)

IGNORE_MISSING_FILE = True # If True, the missing file(s) will be skipped and the process will resume

# If False, TEEHR will fail if a missing NWM file is encountered

OVERWRITE_OUTPUT = True # If True, existing output files will be overwritten

# If False (default), existing files are retained

START_DATE = "2023-03-18"

INGEST_DAYS = 1

OUTPUT_ROOT = Path(Path().home(), "temp")

JSON_DIR = Path(OUTPUT_ROOT, "zarr", CONFIGURATION)

OUTPUT_DIR = Path(OUTPUT_ROOT, "timeseries", CONFIGURATION)

# For this simple example, we'll get data for 10 NWM reaches that coincide with USGS gauges

LOCATION_IDS = [7086109, 7040481, 7053819, 7111205, 7110249, 14299781, 14251875, 14267476, 7152082, 14828145]

Start a local dask cluster#

TEEHR takes advantage of Dask under the hood, so starting a local Dask cluster will improve performance.

n_workers = max(os.cpu_count() - 1, 1)

client = Client(n_workers=n_workers)

client

Fetch the NWM data#

Now we just need to make the call to the function.

%%time

tlp.nwm_to_parquet(

configuration=CONFIGURATION,

output_type=OUTPUT_TYPE,

variable_name=VARIABLE_NAME,

start_date=START_DATE,

ingest_days=INGEST_DAYS,

location_ids=LOCATION_IDS,

json_dir=JSON_DIR,

output_parquet_dir=OUTPUT_DIR,

nwm_version=NWM_VERSION,

data_source=DATA_SOURCE,

kerchunk_method=KERCHUNK_METHOD,

t_minus_hours=T_MINUS,

process_by_z_hour=PROCESS_BY_Z_HOUR,

stepsize=STEPSIZE,

ignore_missing_file=IGNORE_MISSING_FILE,

overwrite_output=OVERWRITE_OUTPUT,

)

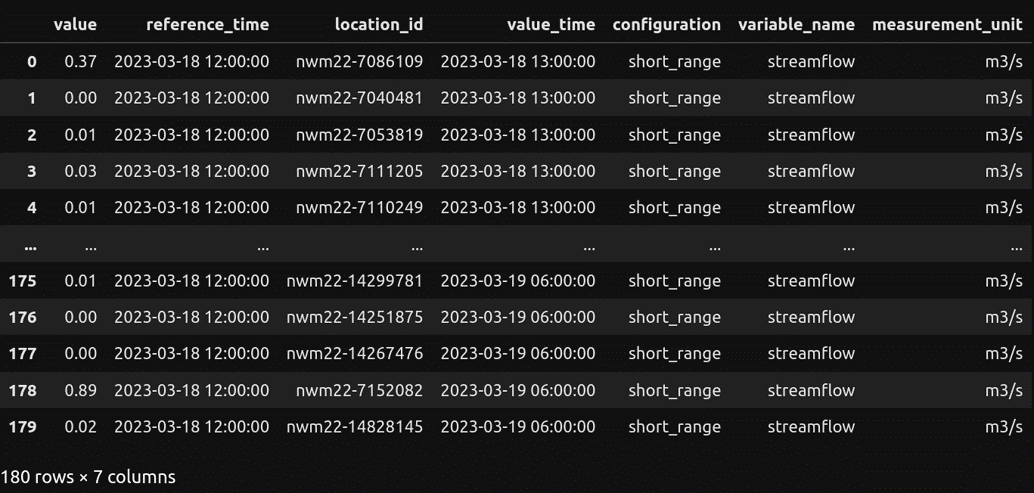

The resulting parquet file should look something like this: